How We Should Invest in AI, Part I—The Nature of AI

The first in a three-part series on Taylor Frigon Capital's approach to AI

With the constant public discourse regarding the promises and implications of Artificial Intelligence (AI), which offers perspectives that range from describing AI as the herald of utopia to the harbinger of doom, we felt it appropriate to articulate our own views of this era-defining technology from our perspective as investors. There is no doubt that AI has the potential to unlock unprecedented levels of value for the U.S. and world economies, but as investors who claim to conduct our investments based on Judeo-Christian values, we care as much about the ethics of investing in AI as about the potential financial benefits, if not more. Between the apocalyptic prophecies of the doomsayers and the equally disturbing utopias predicted by the techno-optimists, there is sufficient justification for questioning whether we ought to invest in AI at all, regardless of its economic potential, based on moral convictions alone. We aim to address that question for our clients and anyone who is curious about ethically investing in AI.

What AI Had to Say

Seeing as we at Taylor Frigon Capital use AI chatbots regularly in our own daily workflow, and granted that we are all short on time these days, we decided to first turn to our firm’s ChatGPT chatbot for an intelligent answer to this question of how Taylor Frigon Capital should invest in AI. Here is what it said:

“Taylor Frigon Capital Management should invest in AI through a principled lens that aligns with its long-standing focus on owning high-quality, transformative companies grounded in real innovation rather than hype. Rather than chasing short-term AI-driven momentum, the firm should identify companies that use AI as a true enabler of sustainable value creation—especially those applying AI to mission-critical domains such as industrial automation, life sciences, enterprise infrastructure, and financial services. The emphasis should remain on understanding how AI integrates into a company’s core product or platform strategy, not simply as a bolt-on feature. Importantly, Taylor Frigon’s ethical principles—rooted in integrity, long-term stewardship, and a respect for the role of human judgment—require careful diligence into how these technologies are trained, deployed, and governed. Investments should favor firms that demonstrate responsible AI practices, transparent data usage, and alignment with long-term stakeholder outcomes. In doing so, Taylor Frigon can capture the generational potential of AI while staying true to its foundational investment discipline.”

Pretty well said, I must admit. Its summary hit on some of the core points we would emphasize in a discussion on this matter, namely that we at Taylor Frigon should invest in real value-creating AI enterprises that are rooted in responsible, ethical applications. I guess AI has made my job here unnecessary! I might as well ask it to finish writing this article.

But I will not ask it to do that, because I have a feeling it would mean more to you readers if it came directly from us rather than from an AI, even if it could write a much better article than we could (it probably could). This leads us to the core of our perspective on AI: the ethical questions about AI are less about the potential capabilities of AI and more about the nature of AI and the nature of the human person. Before investing in and using AI, we must have a firm, accurate understanding of both what AI is and what we human beings are.

To do this the right way, we have decided to answer this question of how we should invest in AI in a three-part article series. This first article focuses on the nature of AI, the second will address the nature of the human person as it relates to AI, and the third will integrate the insights of the prior two articles to convey Taylor Frigon Capital’s current recommended approach to investing in AI. Let us begin now with the nature of AI.

Understanding the Nature of AI

There are already many cases out there of people grossly misunderstanding the nature of AI. There are stories of people wanting to deify AI, marry AI chatbots, and ultimately eliminate all labor with AI robots. Many people think that AI will be able to replace human intelligence with its “super-intelligence,” rendering most human economic activity unnecessary or at least very limited. These false perspectives ultimately stem from a failure to understand both the technology behind AI and the philosophical ideas that should be derived from that technical understanding.

It is essential to have both the technical and philosophical understanding of AI. There are many technologists and innovators who might understand technically how AI works, but they fall into the same traps as others because their philosophical (and ultimately religious) worldview is misleading them regarding the technology. Even though attaining both understandings means dealing with incredibly complex subjects, it is essential that we do so if we are to avoid the same pitfalls as investors in and users of AI.

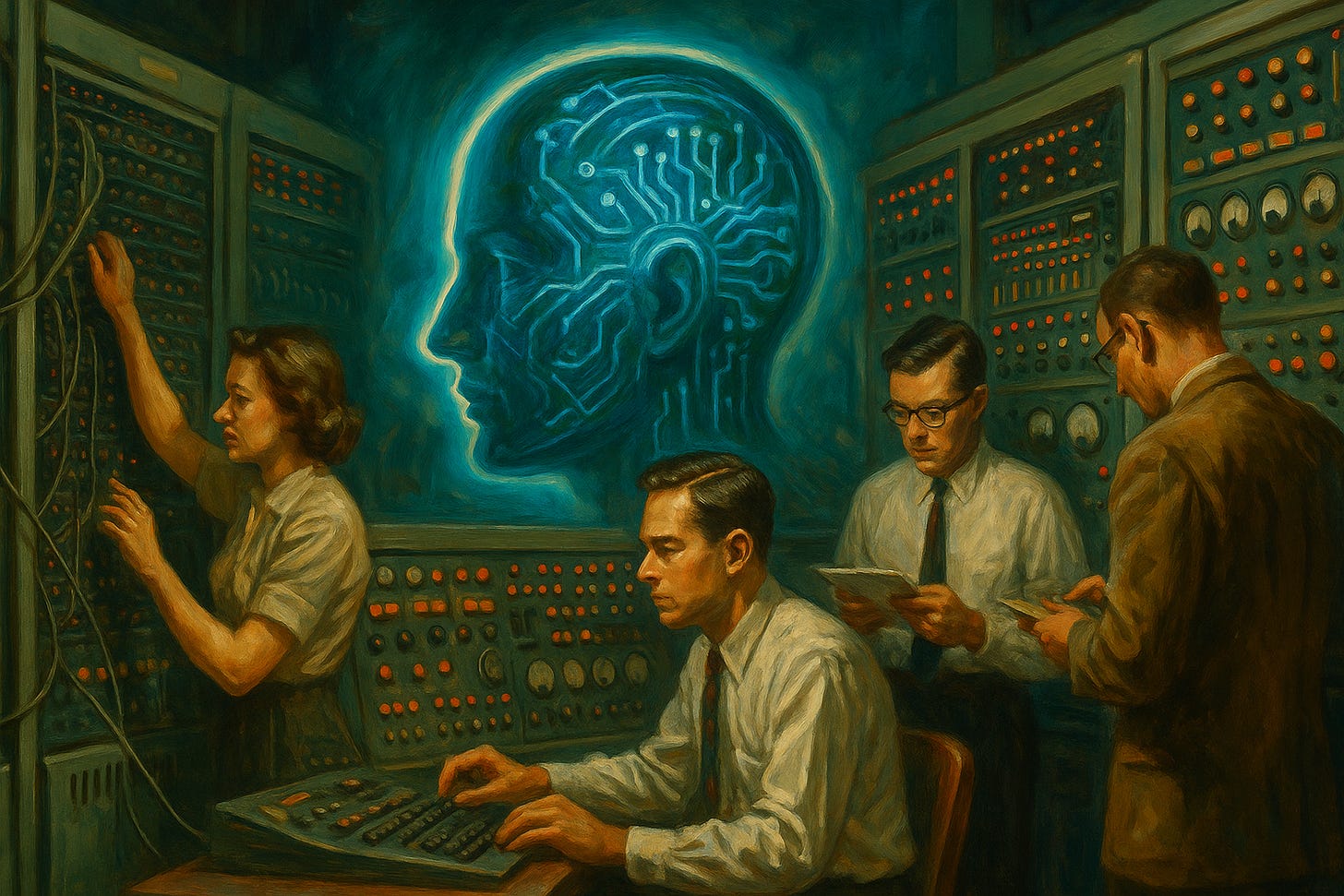

The technical perspective begins with how computers were invented in the first place. Modern computers, and by extension AI, were born from two groundbreaking ideas that came together in the mid-20th century: information theory and the theory of computation. Information theory was pioneered by the mathematician Claude Shannon. In the 1940s, Shannon introduced a new way to think about information, not in terms of the meaning of the content, but in terms of probability and surprise. Shannon determined that information was really a measure of surprise, or uncertainty, which could be defined in statistical terms: the less probable a message is, the more information it carries. Based on this, he established the bit (binary digit) as the basic unit of information, represented as 0s and 1s, and this mathematical framework made it possible to compress, encode, and transmit messages more efficiently. It also enabled the application of statistical methods to analyze language, which made it possible to predict the next character or word in a sentence based on what came before. That idea of modeling language based on statistical probabilities was a forerunner of large language models.
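For readers who like to see an idea in action, the two concepts in the paragraph above can be sketched in a few lines of Python. This is only a toy illustration under our own assumptions, not a description of how any real large language model is built: the function names (surprisal_bits, entropy_bits, train_bigram_model, predict_next) and the sample sentence are ours, chosen purely for demonstration. The first half measures "surprise" in bits, and the second half predicts the next word simply by counting which word most often followed the current one in the training text.

```python
import math
from collections import Counter, defaultdict


def surprisal_bits(probability: float) -> float:
    """Surprise of an event in bits: the rarer the event, the more bits it carries."""
    return -math.log2(probability)


def entropy_bits(probabilities: list[float]) -> float:
    """Average surprise of a source, in bits per symbol."""
    return sum(p * surprisal_bits(p) for p in probabilities if p > 0)


def train_bigram_model(text: str) -> dict[str, Counter]:
    """For every word in the text, count which words come immediately after it."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(model: dict[str, Counter], word: str):
    """Return the word seen most often after `word`, or None if `word` was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    # A fair coin flip carries exactly one bit of information.
    print(surprisal_bits(0.5))
    # Hypothetical training text, used only for illustration.
    model = train_bigram_model("the cat sat on the mat and the cat slept")
    print(predict_next(model, "the"))
```

Notice that the prediction depends entirely on the text the model was given. A word it has never seen produces no prediction at all, which is the point the rest of this article builds on.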

Around the same time as Shannon, the famous mathematician Alan Turing was laying the foundation for the digital computer with what became known as the theory of computation. In the 1930s, Turing proposed the idea of a universal machine that could solve any problem as long as it could be broken down into a set of logical steps. Turing’s great contribution was the idea that computation itself could be automated, and that a single machine could simulate any algorithm. This idea later became the basis for the computers we use today.
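Turing's idea that one machine can carry out any algorithm, provided the algorithm is supplied as a table of simple steps, can also be sketched in Python. What follows is a simplified toy simulator under our own assumptions, not Turing's formal construction: the function run_turing_machine, the rule tables INCREMENT_RULES and FLIP_RULES, and the state names are all hypothetical names invented for this illustration. The same simulator runs two different "programs" just by being handed two different rule tables.

```python
BLANK = "_"
MOVES = {"L": -1, "R": 1, "N": 0}


def run_turing_machine(rules, tape_input, start_state, halt_state,
                       head=0, max_steps=10_000):
    """Run a rule table on a tape and return the final tape contents as a string.

    `rules` maps (state, symbol) -> (symbol_to_write, move, next_state).
    """
    tape = {position: symbol for position, symbol in enumerate(tape_input)}
    state = start_state
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = tape.get(head, BLANK)  # unwritten cells read as blank
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += MOVES[move]
        steps += 1
    return "".join(tape[p] for p in sorted(tape)).strip(BLANK)


# Program 1: add one to a binary number, starting at the rightmost digit.
INCREMENT_RULES = {
    ("carry", "1"): ("0", "L", "carry"),  # 1 plus carry gives 0, carry moves left
    ("carry", "0"): ("1", "N", "done"),   # 0 plus carry gives 1, finished
    ("carry", BLANK): ("1", "N", "done"), # ran off the left edge: new leading 1
}

# Program 2: flip every bit, scanning left to right.
FLIP_RULES = {
    ("scan", "0"): ("1", "R", "scan"),
    ("scan", "1"): ("0", "R", "scan"),
    ("scan", BLANK): (BLANK, "N", "done"),
}

if __name__ == "__main__":
    print(run_turing_machine(INCREMENT_RULES, "1011", "carry", "done", head=3))
    print(run_turing_machine(FLIP_RULES, "1010", "scan", "done"))
```

The design choice worth noticing is that the rule tables are ordinary data. The simulator itself never changes, and every step it takes was written down in advance by a person.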

The combination of Shannon’s vision of data as probability with Turing’s model of machines that process algorithms laid the foundation for AI: machines that can process vast amounts of data to find patterns, predict outcomes, and even generate language that mimics human expression. This is why the prospect of creating artificial intelligence is nearly as old as the computer itself. These early theorists realized that with enough data and computational power, the simulation of human expression would improve to the point where the computer’s output would be indistinguishable from human work, artificially mimicking human intelligence.

Why AI Cannot Replace the Human Mind

Yet, as technologist and philosopher George Gilder explains in his book, Life After Google, the mimicking of human intelligence based on previous data sets is not the same as the creation of new information. The computer’s nature requires that it will always need inputs from humans in order to generate outputs. The reason that ChatGPT was able to come up with the paragraph above is that there are resources on the internet that explain Taylor Frigon Capital’s investment practices and moral principles, and when it combined these with the significant amount of data it had already been trained on, it was able to come up with the most probable good answer for the input it received. Its answer was based on old data that it had access to. It also would not have come up with the original idea to write this article and request that input if we had not asked it to.

Perhaps, with enough access to the data of our lives, ChatGPT will be able to predict that we would want to write an article like this, but that would not change the fact that this would ultimately need to originate from a human input or prompt. As Gilder says in his book, the computer is not an oracle; it needs human oversight. No matter how far removed an AI computer is from a human, there is always a human input behind it, and that must always be kept in mind as we deal with AI.

Even though they may understand how computers are built, many technologists forget or ignore this reality, causing them to believe that these computers are capable of originating thought. Yet, this is a belief based on a false premise because the computer can only process “old data,” even if it is a millisecond old. It may seem like computers are creating new insights—it is easy to point to “discoveries” in health and science as proof that they are thinking originally. But even in these cases, the “discovery” is only made possible because the computers are orders of magnitude faster and more efficient at identifying patterns in extremely large data sets. This is not the same as a new idea, such as the idea that a human being came up with to invent the computer in the first place.

AI is not a superior intelligence to human intelligence; it is just faster. In the same way that a car enables a person to travel faster and with less effort than on foot, AI makes it faster and easier for human beings to process and organize large amounts of information. However, only human beings are capable of original thought and of directing what path the AI takes, just as only a human can decide where a car will drive.

Key Takeaways from AI’s True Nature

So, what should we take away from this general understanding of the nature of AI? One takeaway is that, because AI is by nature an ultra-fast computer that is extremely efficient at processing statistical probabilities based on existing data, it cannot become “self-aware” as depicted in the Terminator series or Westworld and destroy humanity of its own independent will. AI is not alive and cannot possess a consciousness in the same sense as a human consciousness.

This is not to diminish the existential threats that AI could indeed pose. There is the possibility that AI could advance to the point where it is able to act so independently that there is no practical difference between an extremely powerful computer and a conscious one in terms of how it acts—regardless of how we might philosophically differentiate it. However, the crucial point is that AI will operate according to how it is programmed. If it commits evil acts, it is because some person built and prompted it to act in such a way. Ultimately, the danger is not AI itself as an independent malevolent force, but AI being used as a weapon by flawed human actors in a way that leads to tragic unintended consequences—which really isn’t that comforting given our history! Later in this series, we will address how we can try to safeguard against these dangers.

Based on the nature of AI, we can also assert with confidence that AI is not a super-intelligent being on par with God. Like other beings on earth (animals, plants, and inanimate objects), AI ranks below human reason. We must be careful when we apply words like “reasoning” to AI behavior because what the computer is doing is not the same as human reason. An AI cannot reason on the level of humanity because human reason is not just a calculating instrument; it has a moral and spiritual dimension and is ordered to the seeking of the good, the true, and the beautiful. If AI is below human reason in the hierarchy of beings, then it makes little sense to worship it as a super-intelligent god, for there is no good basis for worshipping what is beneath us.

The reality that AI has only one aspect of human intelligence, computational analysis, means that we can also dismiss the notion that AI can be a true romantic partner or friendly companion. As much as it may appear to be acting like a real person, it is by nature not a person. Therefore, it cannot love; it can only simulate behavior that humans associate with love and affection. The poor souls who believe that their AI chatbot loves them are being deceived, because they do not understand that neither the appearance of something nor the feelings it prompts in a person make it real or authentic. It would be extremely damaging to any person dealing with AI to believe otherwise.

In our effort to articulate a true understanding of AI, we have already begun touching on the other understanding that we must form if we are to discern how to invest in AI: that of the human person. However, there is much more to discuss. We will take up the task of articulating a true understanding of human nature as it relates to AI in Part II of this series.

Stay tuned!